In the kubernetes-mixin rules (kubernetes-mixin is a dependency of the kube-prometheus-stack and prometheus-operator charts), an alert's severity label can be critical, warning, info, etc. However, OpsGenie’s priority field only accepts the values P1 through P5.

Since I use both, I need to convert the “severity” label into OpsGenie’s “priority”. For example, when a critical alert fires, I expect a matching P1 alert to be created in OpsGenie; likewise, warning should map to P2 and info to P3.

We could use a Go template in `receivers.*.opsgenie_configs.*.priority` of Alertmanager’s config to generate the priority. However, it would end up looking something like this:

```yaml
priority: >-
  {{ with .GroupLabels.severity }}
  {{ if eq . "critical" }}
  P1
  {{ else if eq . "warning" }}
  P2
  {{ else if ... }}
  ...
  {{ end }}
  {{ end }}
```

Templates like this are hard to maintain and debug, and I personally don’t like writing lots of Go template logic in what should be a short, clear config file. Therefore, I prefer a second approach: use alert relabeling in Prometheus instead of doing the conversion in Alertmanager.

If you’re using kube-prometheus-stack (formerly the prometheus-operator chart), just add these rules under `prometheus.prometheusSpec.additionalAlertRelabelConfigs` in your values:

```yaml
prometheus:
  prometheusSpec:
    additionalAlertRelabelConfigs:
      - source_labels: [severity]
        target_label: priority
        regex: "none" # Seems only the Watchdog alert has "none" severity
        replacement: "P5"
      - source_labels: [severity]
        target_label: priority
        regex: "info"
        replacement: "P3"
      - source_labels: [severity]
        target_label: priority
        regex: "warning"
        replacement: "P2"
      - source_labels: [severity]
        target_label: priority
        regex: "critical"
        replacement: "P1"
```
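These rules run in order, and each one only fires when the whole `severity` value matches its regex, because Prometheus anchors relabel regexes at both ends. The rough Go model below illustrates that default `replace` behavior: source label values joined with `;`, an anchored match, then `$`-style expansion of the replacement. The `relabelRule` type and the `relabel` function are hypothetical and are not Prometheus's real implementation. The `ExampleAlert` alerts are made-up inputs.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// relabelRule is a hypothetical, simplified model of a Prometheus
// relabel_config using the default "replace" action.
type relabelRule struct {
	SourceLabels []string
	TargetLabel  string
	Regex        string
	Replacement  string
}

// The same four rules as the additionalAlertRelabelConfigs above.
var severityToPriority = []relabelRule{
	{[]string{"severity"}, "priority", "none", "P5"},
	{[]string{"severity"}, "priority", "info", "P3"},
	{[]string{"severity"}, "priority", "warning", "P2"},
	{[]string{"severity"}, "priority", "critical", "P1"},
}

// relabel applies rules in order. Each rule joins its source label values
// with ";", matches them against the fully anchored regex and, on a match,
// writes the expanded replacement to the target label. Non-matching rules
// leave the labels untouched. (Prometheus also deletes the target label when
// the replacement expands to ""; that case is not modeled here.)
func relabel(labels map[string]string, rules []relabelRule) map[string]string {
	out := make(map[string]string, len(labels)+1)
	for k, v := range labels {
		out[k] = v
	}
	for _, r := range rules {
		values := make([]string, len(r.SourceLabels))
		for i, name := range r.SourceLabels {
			values[i] = out[name] // a missing label counts as ""
		}
		src := strings.Join(values, ";")
		re := regexp.MustCompile("^(?:" + r.Regex + ")$")
		match := re.FindStringSubmatchIndex(src)
		if match == nil {
			continue
		}
		out[r.TargetLabel] = string(re.ExpandString(nil, r.Replacement, src, match))
	}
	return out
}

func main() {
	alerts := []map[string]string{
		{"alertname": "Watchdog", "severity": "none"},
		{"alertname": "ExampleAlert", "severity": "critical"},
		{"alertname": "ExampleAlert", "severity": "page"},
	}
	for _, a := range alerts {
		fmt.Println(relabel(a, severityToPriority))
	}
}
```

In this model the Watchdog alert gets `priority` P5 and the critical alert gets P1. The alert with an unmapped severity (the made-up `page`) passes through with no `priority` label at all, so it is worth deciding what OpsGenie should receive in that case.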

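Then reference the new label in Alertmanager’s OpsGenie `priority` field. Because this uses `.GroupLabels`, `priority` must be listed in the route’s `group_by`. Quote the value, since an unquoted `{{` would be parsed as YAML flow syntax:

```yaml
priority: '{{ .GroupLabels.priority }}'
```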

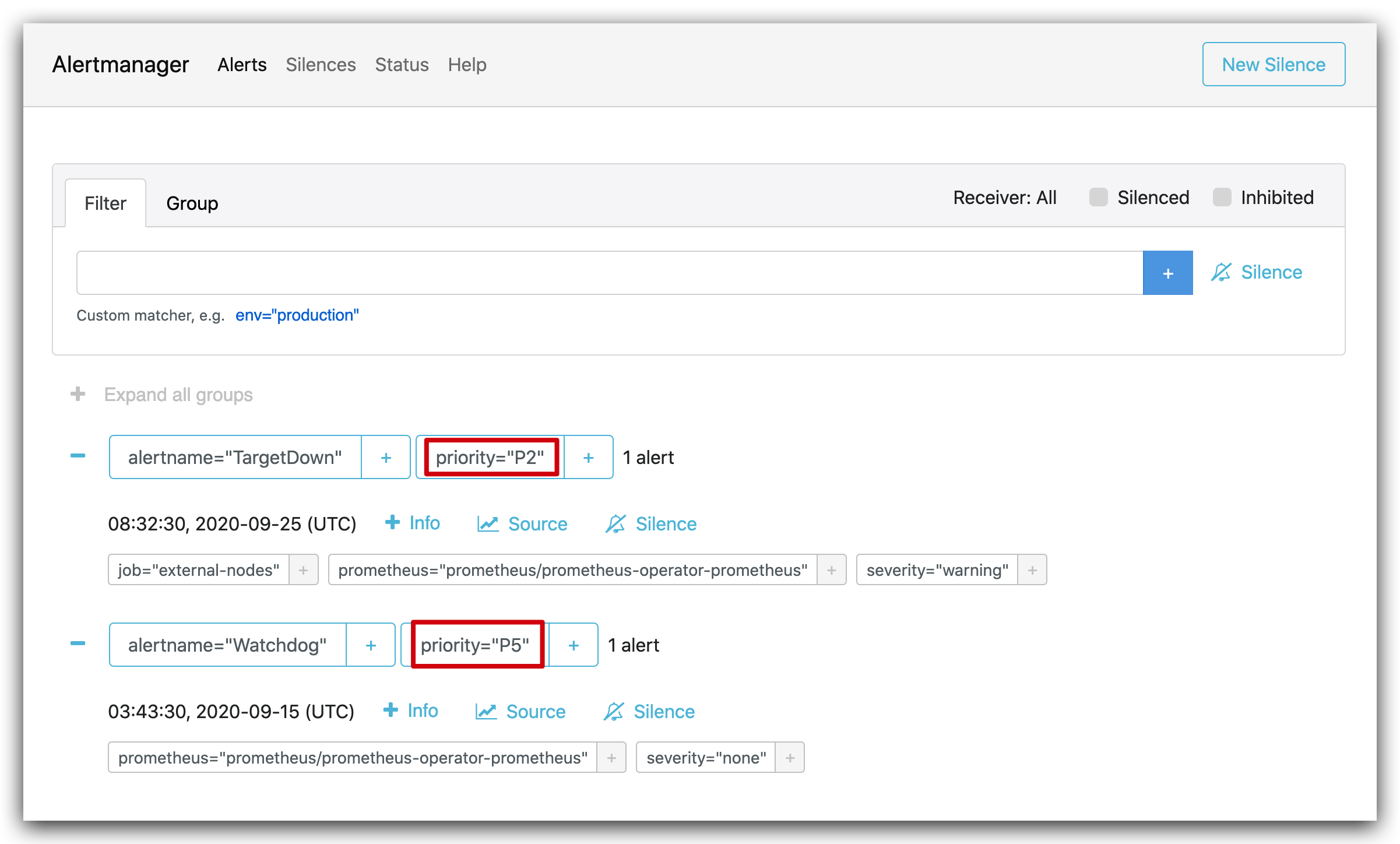

These relabel rules apply only to alerts sent to Alertmanager, adding a priority label based on the value of severity. This also makes debugging easier: if an alert’s priority in your OpsGenie dashboard is wrong, just check Alertmanager’s UI, which shows each alert’s labels, including the priority set by Prometheus. With a lot of logic in Alertmanager’s Go templates, on the other hand, I couldn’t find a simple way to see the rendered result in Alertmanager’s templating context.

For more information about `alert_relabel_configs`, see the official Prometheus documentation.